Adaptive boosting (AdaBoost) is a term from artificial intelligence that also comes up in connection with big data and smart data. It is a machine learning method that combines many simple models into one that makes considerably more accurate predictions.

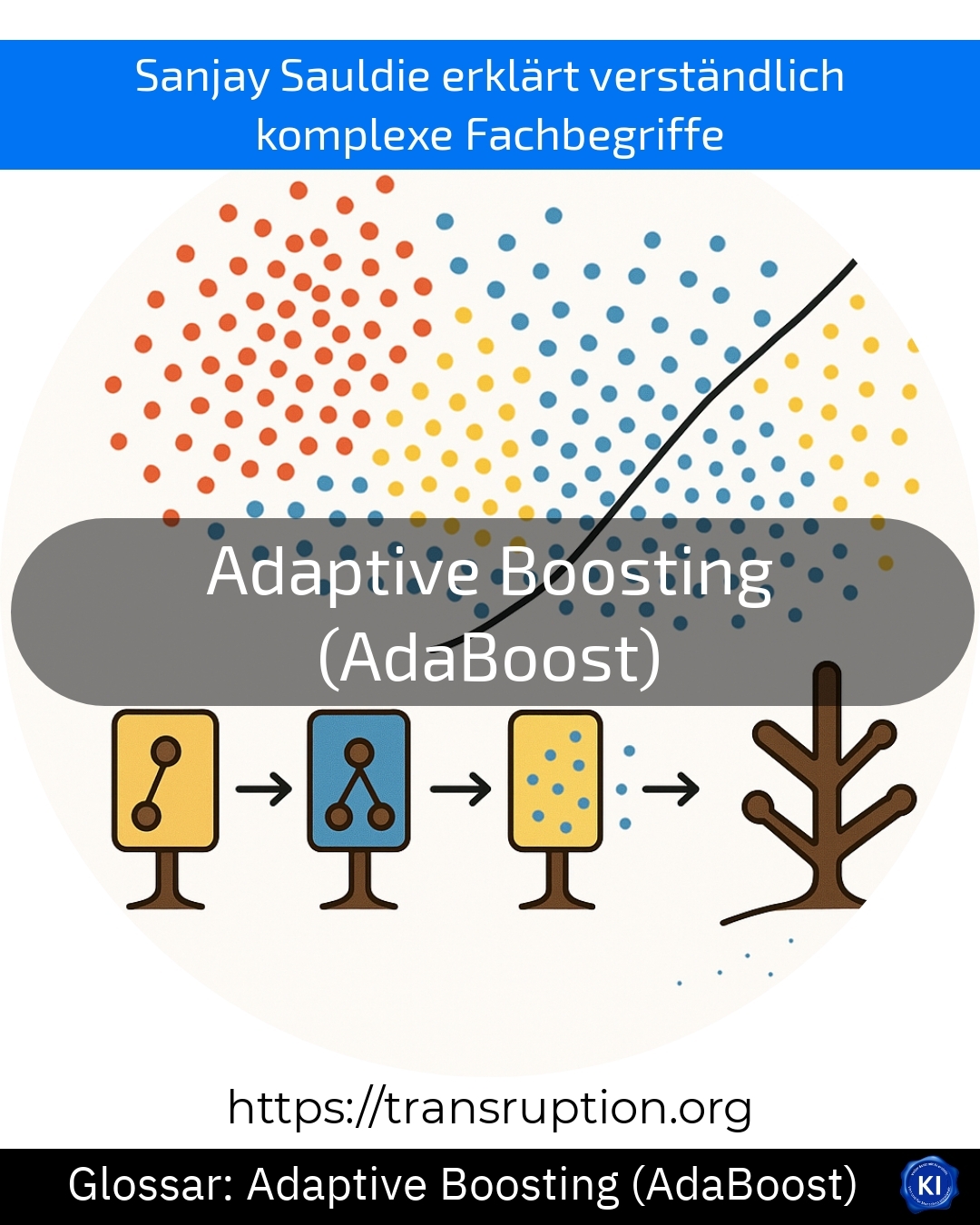

Imagine you want to recognise faulty products on an assembly line. The individual, simple inspectors (small algorithms) responsible for this each make many errors. Adaptive Boosting (AdaBoost) combines many of these simple inspectors in such a way that they complement each other. The inspectors are trained one after another, and each new inspector concentrates on the products that the previous ones misjudged; this is the "adaptive" part of the name. Each individual inspector only makes a small but important decision. AdaBoost assigns a weight to every inspector: the more reliable an inspector is, the more its vote counts. In the end, the weighted vote of all inspectors produces a significantly better result than any single one.
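The idea can be tried out on a toy example. The following is a minimal sketch in plain Python, not a production implementation: the "inspectors" are one-threshold rules (decision stumps), the data set of ten labelled products is made up for illustration, and all function names (`stump_predict`, `best_stump`, `train_adaboost`, `predict`) are hypothetical. The weight formula used, `0.5 * ln((1 - error) / error)`, is the one from the standard discrete AdaBoost algorithm; the text above does not prescribe a specific formula.

```python
import math


def stump_predict(x, threshold, polarity):
    """A simple 'inspector': votes `polarity` below the threshold, the opposite above."""
    return polarity if x < threshold else -polarity


def best_stump(xs, ys, weights):
    """Return (weighted_error, threshold, polarity) of the stump with the lowest weighted error."""
    values = sorted(set(xs))
    # Candidate thresholds: below the smallest value, between neighbours, above the largest.
    thresholds = [values[0] - 0.5]
    thresholds += [(a + b) / 2 for a, b in zip(values, values[1:])]
    thresholds += [values[-1] + 0.5]
    best = None
    for threshold in thresholds:
        for polarity in (1, -1):
            error = sum(
                w for x, y, w in zip(xs, ys, weights)
                if stump_predict(x, threshold, polarity) != y
            )
            if best is None or error < best[0]:
                best = (error, threshold, polarity)
    return best


def train_adaboost(xs, ys, rounds):
    """Train `rounds` stumps one after another; labels must be +1 or -1."""
    n = len(xs)
    weights = [1.0 / n] * n  # every example starts out equally important
    ensemble = []
    for _ in range(rounds):
        error, threshold, polarity = best_stump(xs, ys, weights)
        error = min(max(error, 1e-10), 1 - 1e-10)  # guard against log(0)
        alpha = 0.5 * math.log((1 - error) / error)  # reliable stumps get a larger vote
        ensemble.append((alpha, threshold, polarity))
        # Misclassified examples gain weight, correctly classified ones lose weight.
        weights = [
            w * math.exp(-alpha * y * stump_predict(x, threshold, polarity))
            for x, y, w in zip(xs, ys, weights)
        ]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble


def predict(ensemble, x):
    """Weighted vote of all stumps in the ensemble."""
    score = sum(alpha * stump_predict(x, t, p) for alpha, t, p in ensemble)
    return 1 if score >= 0 else -1


# Made-up measurements for ten products: +1 = OK, -1 = faulty.
xs = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]

for rounds in (1, 3):
    ensemble = train_adaboost(xs, ys, rounds)
    correct = sum(predict(ensemble, x) == y for x, y in zip(xs, ys))
    print(f"{rounds} inspector(s): {correct} of {len(xs)} products judged correctly")
```

On this toy data, no single threshold rule can judge more than seven of the ten products correctly, whereas the weighted vote of three such rules judges all ten correctly. Real applications would normally rely on an established library rather than a hand-written loop like this one.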

In this way, Adaptive Boosting (AdaBoost) allows faulty products to be detected more reliably, even though no individual inspector is perfect. In practice, AdaBoost is used, for example, to improve email spam filters or to recognise patterns in large amounts of data. By combining many weak decisions into one strong decision, adaptive boosting helps machines make fewer wrong decisions.